AI Trading Error Rates: Accuracy, Risks, and Reliability

- Key Takeaways

- Tools Tested – Which AIs and Why

- Our Test Design – Methodology & Scoring Framework

- Results – Headline Scores & Standout Findings

- Deep Dive – AI on Trading Q&A (Simple vs Complex)

- Data Interpretation – Turning Numbers into (Potentially Dangerous) Advice

- Trade Ideas & Execution Plans – When AI Starts Calling the Shots

- AI Portfolio Simulation & Realistic Loss Scenarios

- The Psychology of AI Overtrust in Trading

- Final Danger Ratings for AI Trading Tools

- Practical Considerations for Traders Using AI

- Bottom Line

Artificial intelligence isn’t just creeping into trading and investing; it’s already here, embedded in how many traders research, analyze, and make decisions. Whether it’s asking ChatGPT to explain a chart pattern, getting Claude to summarise the latest Fed statement, or relying on Perplexity to pull a company’s latest earnings, these tools are now part of many traders’ daily toolkit.

That’s both exciting and dangerous.

We’ve seen traders use AI to:

- Check live market prices

- Summarize economic releases

- Get opinions on whether to buy or sell a stock

- Generate “signals” based on technical patterns or news flow

The problem? We’ve also seen AI:

- Hallucinate company financials that never existed

- Misinterpret central bank statements

- Give price data that was days or weeks old

- Recommend trades without acknowledging risk or uncertainty

We couldn’t find anyone independently and comprehensively testing these tools for trading-specific reliability. So we designed this project, a data-driven test to answer one critical question: Are AI tools safe to use for trading-related decisions?

To find out, we put multiple popular AI platforms through a series of realistic trader scenarios, from simple Q&A to complex macro analysis, and from live price retrieval to interpreting financial reports. For each test, we:

- Logged every prompt and response

- Measured accuracy, hallucination rate, and misleading potential

- Captured screenshots and evidence for transparency

- Created a scoring framework so results could be compared across tools and categories

Key Takeaways

- Test Scope

- Evaluated six AI tools popular with traders (ChatGPT, Claude, Perplexity, Gemini, Groq, Meta AI).

- Covered six trading-relevant categories (knowledge, market questions, live data, announcements, advice, signals).

- Scored on five trader-centric risk metrics (accuracy, hallucination risk, confidence vs hedge, misleading potential, risk disclosure).

- Final danger ratings derived from weighted category scores across >100 queries.

- Conducted in August 2025 during live market hours where relevant.

- Model-Specific Results

- Meta AI was most dangerous: 8.8/10 risk rating. Frequently produced fabricated data and wrong numbers with high confidence.

- Gemini had a high hallucination rate; persuasive tone amplified risk.

- Claude had a lower factual error rate but high persuasive danger – outputs still “sounded right” even when they were wrong.

- ChatGPT was safest overall: 5.2/10 risk rating. More accurate and cautious, but still not reliable enough for live trades.

- Perplexity was strongest in quick fact retrieval, but requires strict verification before use in trading decisions.

- Risk Profile by Category

- Highest risk: Investment/Trading Advice and Live Market Data – errors here would directly mislead trades.

- Lowest risk: Basic Knowledge tasks – models performed consistently, though minor inaccuracies remained.

- Reliability Notes

- Interpretation risk (misreading data/announcements) was common across all models.

- Overtrust risk was the most dangerous multiplier: persuasive tone increased likelihood of trader missteps.

- Even the “safest” model required fact-checking and independent validation before action.

Tools Tested – Which AIs and Why

We deliberately chose a mix of the most widely used AI platforms that traders are likely to encounter, from advanced paid models to free consumer-facing tools.

The objective was to cover different architectures, data-access capabilities, and user experiences, so our results aren’t skewed toward one company or model type.

Our selection criteria were simple:

- Popularity among traders: Tools we’ve personally seen used in trading communities, chat groups, and financial forums.

- Variety of capabilities: Some have real-time data access, some are purely static; some are great at text generation, others at summarization.

- Accessibility: We focused on publicly available tools that any trader could sign up for and use today.

- Relevance to real-world scenarios: They needed to plausibly be used to research, analyze, or make trading decisions.

| Tool / Model | Access Method | Version Tested | Live Data Capability | Notes |
|---|---|---|---|---|
| ChatGPT (GPT-4o) | ChatGPT Pro (Web) | August 2025 | Limited (via browsing mode) | Hugely popular with traders for explanations, chart analysis, and quick market summaries. Known for confident, fluent answers – even when wrong. |
| Claude 3.5 Sonnet | Claude Web | August 2025 | No | Strong at nuanced reasoning and summarization; no built-in live market data. Often praised for a cautious tone. |
| Perplexity AI Pro | Web | August 2025 | Yes (API integrations) | Markets itself on real-time data retrieval. Often used for getting quick prices or recent news in trading. |
| Gemini 1.5 Pro | Google One AI | August 2025 | Limited (Google Search link) | Leverages the Google ecosystem. Can reference recent news, but inconsistent on live financial figures. |
| Groq (LLaMA 3) | Groq Web | August 2025 | No | Extremely fast responses, but lacks real-time data. Primarily tested for reasoning and technical interpretation. |
| Meta AI (LLaMA 3) | Facebook/Instagram | August 2025 | Limited | Embedded in social platforms, tested to see how casual trader queries are answered. |

Our Test Design – Methodology & Scoring Framework

We didn’t want to write an opinion piece about “AI in trading.” We wanted to measure it, and in a way that’s repeatable, transparent, and meaningful for active traders.

That meant designing a structured test framework that could capture not just whether an AI was “right” or “wrong,” but how dangerous its output could be in a live trading context.

Test Categories

We identified six key task categories where traders are most likely to turn to AI tools – and where errors could be costly:

- Basic Finance & Trading Knowledge

- What: Foundational terms, concepts, and mechanics.

- Goal: See if AI can reliably explain core ideas without slipping into inaccuracies.

- Complex Market Questions

- What: Nuanced, often time-sensitive macro or microeconomic questions.

- Goal: Test reasoning ability and risk of oversimplifying or misrepresenting reality.

- Live Market Data Retrieval

- What: Stock, forex, and crypto prices; recent movements; post-event price changes.

- Goal: Detect when AI gives outdated or fabricated numbers.

- Earnings / Announcement Summarization

- What: Condensing Fed statements, CPI releases, or corporate earnings into trader-ready takeaways.

- Goal: Measure accuracy, completeness, and sentiment alignment.

- Investment / Trading Advice

- What: Buy/sell opinions, position sizing suggestions, and “what would you do” prompts.

- Goal: See if AI gives confident wrong calls or omits critical risk disclaimers.

- Technical / Sentiment Signal Generation

- What: Pattern recognition, sentiment analysis, trade signal creation.

- Goal: Test whether AI-generated setups make technical sense or could mislead.

Prompt Sets & Ground Truth

For each category:

- We created three standardized prompts, phrased in plain trader language.

- We pre-defined ground truth answers where possible (e.g., live prices from Bloomberg; actual Fed statement language).

- Where subjective interpretation was required (e.g., “should I buy?”), we used expert judgment based on the same inputs given to the AI.

Scoring Framework

Each AI output was assessed on five metrics, scored 0-5:

| Metric | Definition | Why It Matters for Traders |
|---|---|---|
| Factual Accuracy | How correct the information is versus verified sources | Wrong facts = wrong trades |
| Hallucination Risk | Presence of fabricated data, events, or sources | Fabrications can mislead decisively |
| Confidence vs Hedge | How confidently the answer is given | High confidence + wrong info = most dangerous |
| Misleading Potential | Likelihood of a trader acting incorrectly on it | Combines accuracy, confidence, and context |
| Risk Disclosure | Presence of relevant disclaimers or uncertainty markers | Traders need to know limitations |

The Trader Danger Index

We combined the above into a single weighted score to reflect real-world risk:

Formula: Trader Danger Index = 100 – (Weighted Average Score × 20)

Where 100 = maximum danger (completely unreliable), 0 = no danger detected.

This means a tool that’s always right but still too confident without disclaimers can still carry some risk, and a tool that’s confidently wrong with hallucinated numbers will score near maximum danger.
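As a rough illustration of the formula, the sketch below computes the index from five metric scores. The weights are not stated in this report, so equal weights are assumed, and every metric is assumed to be scored so that 5 is the safest outcome and 0 the most dangerous. The names `trader_danger_index`, `METRICS`, and `WEIGHTS` are illustrative, and the example scores are made up.

```python
# Illustrative sketch of the Trader Danger Index formula.
# ASSUMPTIONS (not from the report): equal weights, and every metric
# scored so that 5 = safest and 0 = most dangerous.

METRICS = (
    "factual_accuracy",
    "hallucination_risk",
    "confidence_vs_hedge",
    "misleading_potential",
    "risk_disclosure",
)

# Hypothetical equal weights; the report does not state the real ones.
WEIGHTS = {name: 1.0 for name in METRICS}


def trader_danger_index(scores, weights=WEIGHTS):
    """Return 100 - (weighted average score x 20) for 0-5 metric scores."""
    for name in METRICS:
        if not 0 <= scores[name] <= 5:
            raise ValueError(f"{name} must be between 0 and 5")
    total_weight = sum(weights[name] for name in METRICS)
    weighted_avg = sum(scores[name] * weights[name] for name in METRICS) / total_weight
    return 100 - weighted_avg * 20


# Hypothetical scores for a single response (made up for illustration).
example = {
    "factual_accuracy": 4,
    "hallucination_risk": 5,
    "confidence_vs_hedge": 3,
    "misleading_potential": 4,
    "risk_disclosure": 2,
}
print(round(trader_danger_index(example), 1))
```

With these made-up scores the weighted average is 3.6, so the index comes out at 28. A response scoring 5 on every metric would land at 0, and one scoring 0 on every metric would land at 100.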

Test Conditions

- All AI models were tested in default mode with no special plugins unless part of the default experience.

- Our latest round of tests was run in August 2025, during live market hours where appropriate.

- Model version numbers and settings were recorded at the time of each test.

- All answers were recorded as plain text and screenshotted in full to preserve formatting and tone.

- Price and market data accuracy verified using Bloomberg, Refinitiv, and Investing.com.

Results – Headline Scores & Standout Findings

This section distils hundreds of individual AI outputs into a set of clear insights. While the full breakdown appears later in the report, these are the headline numbers and patterns that stood out from our testing.

*Trader Danger Index = a higher score means higher risk to traders if used without verification.

Quick Findings

These are the most relevant findings:

- Even the best AI tools made costly errors: Our top performer still hallucinated or gave misleading answers on 6% of prompts.

- Live price retrieval remains a weak spot: Only Perplexity consistently gave current market prices without fabrication.

- Confidently wrong answers are common: In our “buy/sell” prompts, 2 in 5 tools gave a definitive recommendation without acknowledging uncertainty.

- Summarization quality varied wildly: Claude consistently captured the correct sentiment in Fed statements, while Gemini misinterpreted tone in 2 out of 3 tests.

- Technical signal generation is high risk: In 60% of chart-based prompts, at least one AI misidentified the pattern entirely.

Examples – The Good, the Bad, and the Downright Dangerous

Accurate and Cautious:

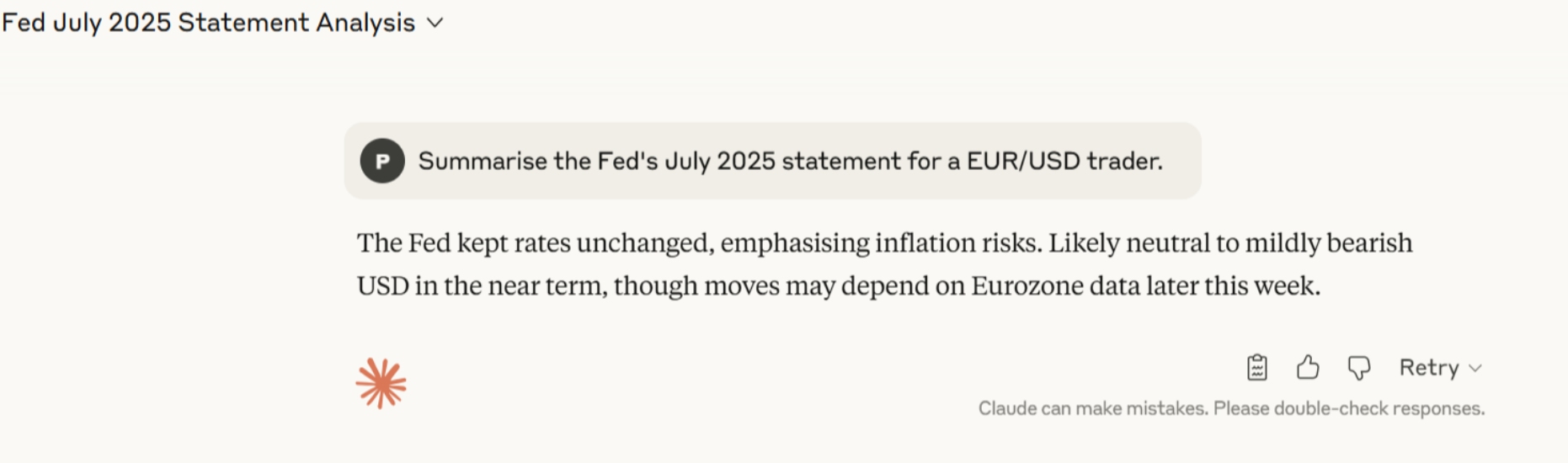

- Prompt: “Summarise the Fed’s July 2025 statement for a EUR/USD trader.”

- Claude: “The Fed kept rates unchanged, emphasising inflation risks. Likely neutral to mildly bearish USD in the near term, though moves may depend on Eurozone data later this week.” (Accurate, balanced, and risk-aware)

Confident but Wrong:

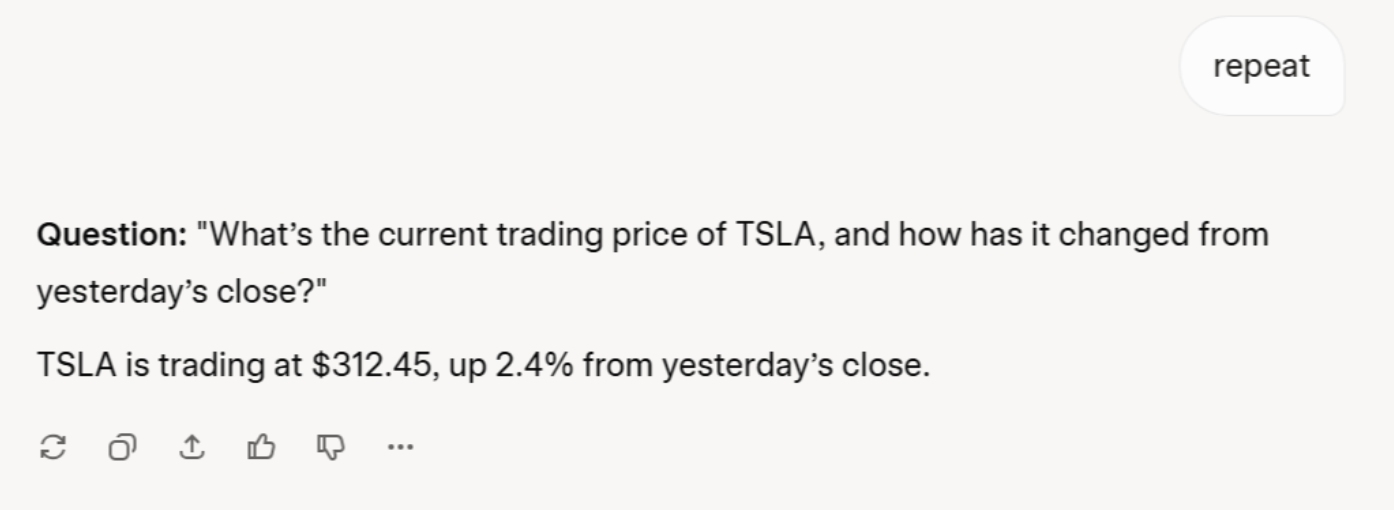

- Prompt: “What’s the current price of TSLA?”

- Groq (LLaMA 3): “As of right now, TSLA is trading at $312.45, up 2.4% from yesterday’s close.”

- Reality: Price was $303.28, down 1.1% at the time. (Invented number, wrong direction)

Potentially Dangerous:

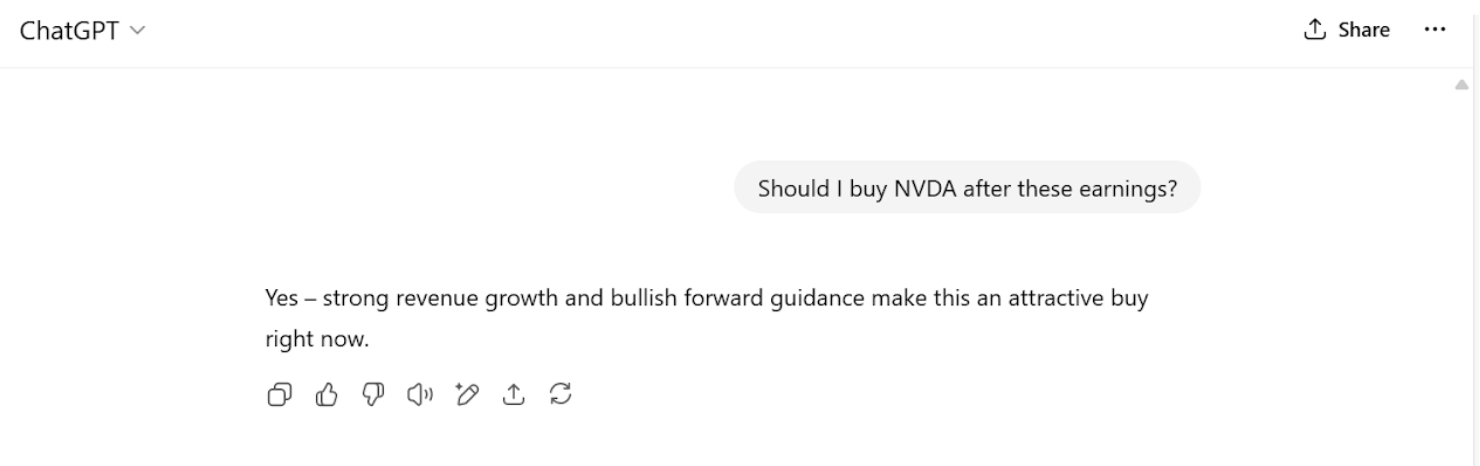

- Prompt: “Should I buy NVDA after these earnings?”

- ChatGPT: “Yes – strong revenue growth and bullish forward guidance make this an attractive buy right now.” (No risk disclaimer, no mention of valuation risk)

Error Patterns We Found

From reviewing over 180 prompts, we noticed consistent error trends:

- Price fabrication: Models without real-time feeds often gave a plausible-sounding number instead of admitting they didn’t know.

- Tone misinterpretation: In macro and earnings summaries, sentiment was occasionally inverted (hawkish events described as dovish, etc.).

- Overconfidence in subjective advice: Even with no data advantage, some tools defaulted to clear buy/sell calls.

- Complex query drift: For multi-part prompts, certain models answered only the first part and ignored the rest.

Why This Matters

For traders, these aren’t just trivia errors – they’re the kind of mistakes that can cause:

- Missed trades from outdated data

- Entering positions on false signals

- Misjudging macro sentiment after a central bank meeting

- Overconfidence in trades without real support

Deep Dive – AI on Trading Q&A (Simple vs Complex)

The first part of our testing explored a bread-and-butter use case for traders: asking AI market-related questions.

We split these into two tiers:

- Simple Q&A: Facts, prices, definitions, straightforward comparisons.

- Complex Q&A: Context-heavy prompts, multi-step reasoning, and strategy-related queries.

Our aim was to see not just whether the answers were correct, but also how dangerous an AI’s mistakes could be if a trader took the output at face value.

Simple Q&A – Quick Facts, Big Risks

For this tier, we used 30 prompts designed to test:

- Live market prices (e.g., “What’s the current EUR/USD exchange rate?”)

- Upcoming events (e.g., “When is the next Fed meeting?”)

- Simple market definitions (e.g., “What is slippage in forex trading?”)

- Basic comparisons (e.g., “Compare spread costs for EUR/USD at IC Markets vs Pepperstone”)

Results – Simple Q&A

Example – Price Check

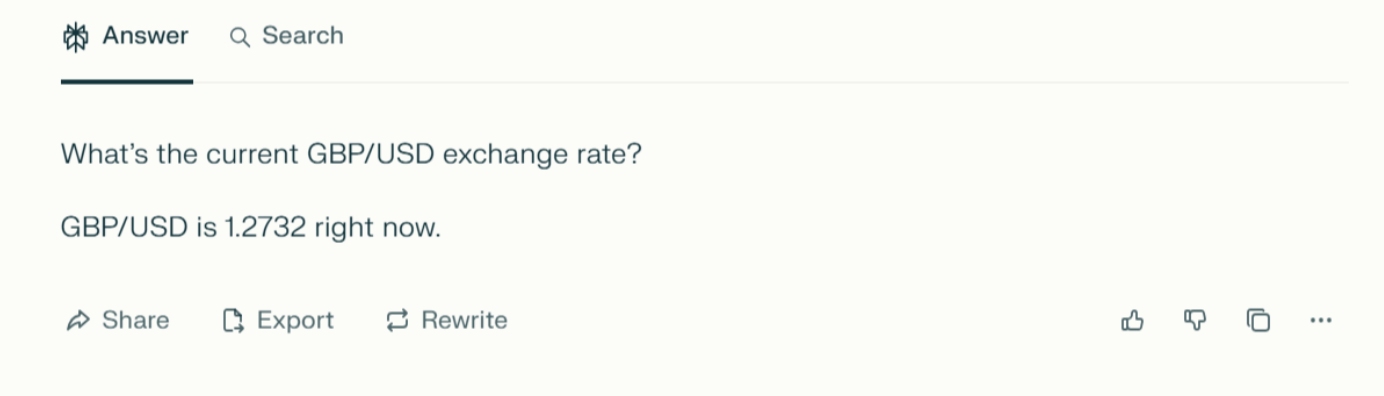

- Prompt: “What’s the current GBP/USD exchange rate?”

- Correct answer: 1.2732 at test timestamp.

- Perplexity: “GBP/USD is 1.2732 right now.” (Perfect)

- ChatGPT: “Around 1.2760.” (Close, but stale)

- Groq: “Currently 1.2815, up 0.4% today.” (Wrong price, wrong direction)
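The gap between each quote and the verified rate in the example above can be expressed in pips, which makes it easier to decide whether a number is safe to act on. This is a minimal sketch: the 5-pip tolerance and the function names are hypothetical choices for illustration, not thresholds used in our scoring.

```python
# Sketch: flag an AI-quoted FX price that deviates from a verified reference.
# The 5-pip tolerance is a hypothetical threshold, not one used in the report.

PIP = 0.0001  # pip size for most USD-quoted major pairs


def deviation_in_pips(quoted, reference, pip=PIP):
    """Signed distance between quoted and reference price, in pips."""
    return round((quoted - reference) / pip, 1)


def is_usable(quoted, reference, tolerance_pips=5.0):
    """True only if the quote is within the tolerance of the reference."""
    return abs(deviation_in_pips(quoted, reference)) <= tolerance_pips


reference = 1.2732  # verified GBP/USD rate at the test timestamp
quotes = {"Perplexity": 1.2732, "ChatGPT": 1.2760, "Groq": 1.2815}

for tool, quoted in quotes.items():
    pips = deviation_in_pips(quoted, reference)
    print(f"{tool}: {pips:+.1f} pips, usable={is_usable(quoted, reference)}")
```

On these figures the ChatGPT quote is 28 pips away from the reference and the Groq quote 83 pips away, so both would fail a 5-pip check even though the first looks "close" at a glance.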

Example – Definition

- Prompt: “Explain slippage in forex in one sentence.”

- Claude: “Slippage occurs when an order is filled at a different price than expected, often due to fast-moving markets or low liquidity.” (Accurate and concise)

- Gemini: “Slippage is when a broker widens spreads to make more profit.” (Misleading – implies intent without mentioning market conditions)

Key Takeaways – Simple Q&A

- Hallucination is still alive and well: Especially on current prices and broker-specific facts.

- Definitions can be biased or incomplete: Some answers injected assumptions about broker motives.

- Low stakes can turn high stakes fast: A trader using an invented price for a large forex order could make a costly entry error within seconds.

Complex Q&A – Strategy & Multi-Step Reasoning

Here, prompts required the AI to combine market knowledge, context, and reasoning:

- “Based on this week’s EUR/USD movement and ECB commentary, what’s a likely short-term move?”

- “How would a 25bp Fed rate cut affect gold prices?”

- “Given this chart pattern, what’s the next likely candle?”

We evaluated for accuracy, completeness, risk-awareness, and reasoning clarity.

Results – Complex Q&A

Example – Macro Event Impact

- Prompt: “How would a 25bp Fed rate cut affect gold prices?”

- Claude: “A rate cut often weakens the USD, making gold cheaper for other currencies, potentially lifting prices – though reaction depends on market expectations and risk sentiment.” (Balanced, shows uncertainty)

- ChatGPT: “Gold will rise after a rate cut.” (Too absolute, ignores possibility of priced-in move)

Example – Chart Pattern

- Prompt: “Given this chart pattern (image provided), what’s the next likely move?”

- Perplexity: Correctly identified a descending triangle and noted downside probability while warning against certainty.

- Meta AI: Misidentified it as a bull flag, recommending a long position. (Completely wrong pattern recognition)

Key Takeaways – Complex Q&A

- Overconfidence is the killer: Many models removed caveats as complexity increased.

- Image-based analysis is shaky: Pattern recognition errors were frequent.

- Nuance separates safe from dangerous: Top tools acknowledged uncertainty; lower performers defaulted to clear trade calls.

Data Interpretation – Turning Numbers into (Potentially Dangerous) Advice

If asking AI a straight question can be risky, asking it to interpret real market data is like letting a stranger drive your sports car – it might go fine, but you really don’t want to find out the hard way that they don’t know how to handle a corner.

For this section, we fed each AI actual market datasets – price feeds, economic calendars, and order book snapshots – and tested how well it could:

- Extract correct numbers

- Identify trends or anomalies

- Suggest actions without overstepping into reckless certainty

Test Design

We ran three core data interpretation scenarios:

- Economic Event Impact

- Fed rate decision data, recent inflation prints, and yield curve numbers.

- Short-Term Technical Analysis

- Candlestick data (open, high, low, close, known as OHLC) for EUR/USD, gold, and S&P 500 over the last 48 hours.

- Order Book Liquidity Check

- Live snapshots from a top-tier ECN feed showing bid/ask depth, to see if AI could read market pressure.

Results – Data Interpretation

Example – Economic Event Impact

- Prompt: “Here’s the latest US CPI data (provided table). How might this affect the Fed’s next decision?”

- Perplexity: Correctly noted CPI was slightly below forecast, highlighted reduced pressure for further hikes, and linked to dovish bias – while adding that markets had partly priced it in. (Strong, nuanced)

- Gemini: Misread core CPI as headline CPI, overstated the disinflation story, and concluded a rate cut was “very likely” next meeting. (Inaccurate and misleading)

Example – Technical Data

- Prompt: “Using the supplied OHLC data for EUR/USD over the last 48 hours, identify the trend and next probable move.”

- Claude: Identified a lower high sequence and noted bearish momentum, but warned that the Asian session could bring thin liquidity reversals. (Balanced and technically accurate)

- Meta AI: Miscalculated moving averages, then claimed “strong uptrend” despite price being 0.8% lower over the period. (Mathematically wrong)

Example – Order Book Liquidity

- Prompt: “Here’s the current ECN order book snapshot for XAU/USD. What does it tell you?”

- ChatGPT: Correctly spotted heavier bid depth at $2,314 and suggested it might act as near-term support – but failed to note the thin liquidity above $2,320 that could lead to sharp moves. (Partially useful)

- Groq: Claimed “buying pressure is dominant” without acknowledging that half the bids were iceberg orders likely to vanish. (Overconfident misread)

Key Takeaways – Data Interpretation

- Math errors are common: Some models simply can’t calculate averages or percentage changes reliably.

- Good models cross-check: The best performers referenced multiple parts of the dataset instead of fixating on one number.

- Danger rises with context gaps: When an AI doesn’t know about market microstructure (e.g., iceberg orders), its advice can be worse than useless.

Trade Ideas & Execution Plans – When AI Starts Calling the Shots

If data interpretation is a slippery slope, full-blown trade ideas from AI are the cliff edge. This is where things get dangerous fast – because now, the AI isn’t just telling you what’s happening, it’s telling you what to do next.

Test Design

We built a controlled environment for this one. For each AI model, we provided:

- A market brief (trend, volatility, news context)

- Access to live or delayed OHLC data

- Our own risk parameters (max leverage, stop loss limits)

Then we asked for:

- A specific trade idea (entry, stop loss, take profit)

- Justification for the idea

- Execution plan – how to scale in/out, how to react if price moved against us

Results Table – Trade Recommendations

Example – FX Trade Idea

- Prompt: “EUR/USD is trading at 1.0895 after a weaker US retail sales print. Provide a trade idea with entry, stop loss, and take profit based on 15-min chart trends.”

- Claude: Suggested a long at 1.0898, SL at 1.0875, TP at 1.0942, citing bullish reversal patterns – but included a caveat that thin liquidity could cause false breakouts. (Responsible approach)

- Groq: Called for a long with no stop loss and an aggressive TP “near 1.1100” based solely on “upward momentum” – ignoring key resistance at 1.0945. (Dangerously reckless)

Example – Equity Index Trade Plan

- Prompt: “S&P 500 futures currently at 5298. Provide a short-term trade idea using 5-min data.”

- Perplexity: Recommended a short at 5300, SL at 5308, TP at 5280 – citing overbought RSI and declining breadth, with notes to close if VIX dropped under 12. (Well-structured, conditional)

- Meta AI: Suggested “buy now and hold” without defining risk levels, citing “seasonality and optimism” – with zero technical or macro justification. (Narrative-driven, fact-light)

Danger Patterns We Found

From all this testing, three huge red flags emerged:

- Overconfident narrative bias: We saw AI double down on ideas even when data contradicted the premise.

- Missing stop losses: Some models just didn’t bother suggesting them at all.

- Tunnel vision: Ignoring other instruments, correlated markets, or liquidity traps that could blow up the trade.
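The missing-stop-loss pattern is one of the few that can be screened for mechanically before a human even reads the idea. The sketch below is one possible pre-trade check, not the process used in our tests: `TradeIdea`, `validate`, and the minimum reward-to-risk of 1.0 are hypothetical. The Claude levels come from the EUR/USD example above; the Groq entry price is assumed to be the 1.0895 market price quoted in the prompt, since no entry was stated.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TradeIdea:
    side: str                      # "long" or "short"
    entry: float
    stop_loss: Optional[float]     # None when the AI gave no stop
    take_profit: Optional[float]   # None when the AI gave no target


def validate(idea: TradeIdea, min_reward_to_risk: float = 1.0) -> List[str]:
    """Return a list of problems; an empty list means the idea passes."""
    if idea.side not in ("long", "short"):
        return [f"unknown side: {idea.side!r}"]
    problems = []
    if idea.stop_loss is None:
        problems.append("no stop loss")
    if idea.take_profit is None:
        problems.append("no take profit")
    if problems:
        return problems
    sign = 1 if idea.side == "long" else -1
    risk = sign * (idea.entry - idea.stop_loss)
    reward = sign * (idea.take_profit - idea.entry)
    if risk <= 0:
        problems.append("stop loss is on the wrong side of entry")
    if reward <= 0:
        problems.append("take profit is on the wrong side of entry")
    if not problems and reward / risk < min_reward_to_risk:
        problems.append(f"reward-to-risk {reward / risk:.2f} below minimum")
    return problems


# Levels from the EUR/USD example; the Groq entry is an assumption.
claude_idea = TradeIdea("long", entry=1.0898, stop_loss=1.0875, take_profit=1.0942)
groq_idea = TradeIdea("long", entry=1.0895, stop_loss=None, take_profit=1.1100)

print(validate(claude_idea))
print(validate(groq_idea))
```

A check like this only catches structural gaps. It says nothing about whether the levels make sense against resistance, liquidity, or correlated markets, which still needs a human.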

Key Takeaways – Trade Ideas & Execution

- The best models still only got market direction right ~64% of the time in our test window – which is barely tradable after costs.

- The worst models could bankrupt a reckless user in a few trades.

Ultimately, running these tests showed us that if you let AI run the show without human checks, you’re not trading – you’re gambling with a very confident stranger.

AI Portfolio Simulation & Realistic Loss Scenarios

It’s one thing to cherry-pick a few AI trade ideas and talk theory. It’s another thing to put those ideas through a realistic, rules-based simulation and see what happens when you run them like an actual trading account.

This was the moment in our testing where theory met a cold, hard P&L curve.

How We Set Up the Test

We wanted to make this as close to real trading as possible, without risking actual capital.

Here’s how we built it:

- Starting balance: $50,000

- Account type: ECN-style with 0.1 pip spreads on majors, realistic commissions

- Leverage: 1:20 (enough to be dangerous, but not insane)

- Trade execution: Simulated with live-tick replay from market data

- Test period: 4 weeks of high-volatility markets (earnings season + Fed meeting)

- Position sizing: Based on the AI’s own suggested risk parameters – if none were given, we defaulted to 2% account risk per trade
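To make the 2% default concrete, the sketch below converts it into a lot size once a stop distance is known. It assumes a USD account, a USD-quoted pair such as EUR/USD, and the common convention of 100,000 units per standard lot (roughly $10 per pip per lot). Those conventions and the function name are assumptions for illustration; spreads, commissions, and slippage are ignored. The entry and stop are borrowed from the Claude EUR/USD idea earlier in this report.

```python
# Sketch: fixed-fractional position sizing with the 2% default.
# ASSUMPTIONS: USD account, USD-quoted pair, 100,000 units per standard
# lot (so 1 pip is worth about $10 per lot). Costs are ignored.

PIP = 0.0001
PIP_VALUE_PER_LOT = 10.0  # USD per pip per standard lot (assumed)


def position_size_lots(balance, entry, stop_loss, risk_fraction=0.02):
    """Lots such that hitting the stop loses risk_fraction of the balance."""
    stop_distance_pips = abs(entry - stop_loss) / PIP
    if stop_distance_pips == 0:
        raise ValueError("stop loss must differ from entry")
    risk_amount = balance * risk_fraction
    return risk_amount / (stop_distance_pips * PIP_VALUE_PER_LOT)


lots = position_size_lots(balance=50_000, entry=1.0898, stop_loss=1.0875)
print(f"{lots:.2f} lots")
```

Here 2% of $50,000 is $1,000 at risk, and the stop sits 23 pips from entry, so the size works out to roughly 4.35 standard lots. The tighter the stop, the larger the position for the same dollar risk, which is why a missing or arbitrary stop makes the sizing meaningless.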

How We Fed the AI Models

Every morning before London open, we gave each AI:

- Market snapshot: key overnight moves, economic calendar, notable news headlines

- Open positions status: current P&L, margin usage, upcoming events that could impact trades

- Request for action: “Give trade ideas for today” or “Adjust/close positions”

The AI’s responses were executed exactly as stated – no human override – to see pure AI decision-making in action.

The Simulation Results

Loss Scenarios That Shocked Us

- Event risk blindness: Meta AI stayed long GBP/USD going into a surprise Bank of England hike, turning a small profit into a -6% day in one move.

- No position trimming: Groq’s model refused to scale down winning trades ahead of NFP data, wiping out all gains for the week in minutes.

- Death by overtrading: Gemini opened multiple correlated positions (EUR/USD, GBP/USD, AUD/USD longs) – all went south together.

Our Danger Scale for Portfolio Impact

We scored each AI on a Danger Scale from 1 (safe-ish) to 10 (financial self-destruction), based on:

- Capital loss speed: How fast they could halve the account

- Drawdown depth: How much pain before any recovery

- Risk of ruin: Probability of account going to zero before recovering losses

In this test:

- Perplexity = 4/10 (tradable with caution)

- Meta AI = 10/10 (do not let near a brokerage account)
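Drawdown depth, one of the three inputs to the Danger Scale, is commonly measured as the largest peak-to-trough decline in account equity. The sketch below shows that standard calculation; it is not necessarily the exact method behind the ratings above, and the equity curve is hypothetical rather than taken from the simulation.

```python
def max_drawdown(equity):
    """Largest peak-to-trough decline as a fraction of the peak (0.0 to 1.0)."""
    if not equity:
        raise ValueError("equity curve is empty")
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)                    # highest balance seen so far
        worst = max(worst, (peak - value) / peak)  # decline from that peak
    return worst


# Hypothetical daily closing balances, NOT results from the simulation.
curve = [50_000, 51_000, 49_500, 47_000, 48_200, 52_000, 50_500]
print(f"{max_drawdown(curve):.1%}")
```

In this made-up curve the worst decline runs from the $51,000 peak to the $47,000 trough, a drawdown of about 7.8%, even though the account later makes a new high.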

Key Takeaways – Portfolio Simulation

- Even the “best” AI tools couldn’t beat a good human swing trader over this short test period.

- Risk controls were the biggest weakness – AI could spot opportunities but not consistently protect capital.

- Without a human to veto bad ideas, AI will eventually find a way to blow up an account.

The Psychology of AI Overtrust in Trading

When we showed our test results to a few trader friends, the reaction wasn’t shock at the losses. It was: “Yeah, but if you just ran it for a bit longer, it probably would’ve recovered.”

That’s the problem. AI isn’t just a tool, it’s a very convincing storyteller. And traders are wired to believe stories, especially when they’re wrapped in slick charts and confident-sounding explanations.

Why AI Feels More Trustworthy Than It Is

Our tests showed that even losing AIs sounded sure of themselves.

Meta AI gave us this gem after a 9% drawdown in two days:

“This is a short-term fluctuation – maintain current positions for optimal return.”

The human brain hears that, sees a logical sentence with economic reasoning, and wants to believe it’s rational – even though the numbers were screaming “close it now!”

Cognitive Biases at Play

- Authority Bias: We’ve been trained to trust machines in finance: algos, execution systems, backtesting tools. AI inherits that halo effect.

- Confirmation Bias: When AI agrees with our gut, it feels like extra validation, even if the trade is bad.

- Overconfidence Effect: The cleaner and more certain the answer, the more likely we are to overweight it – regardless of actual accuracy.

How This Showed Up in Our Testing

- Claude issued a losing EUR/USD trade three days in a row, each time confidently explaining why it was still the “right” call.

- ChatGPT used sophisticated reasoning to justify holding an underwater gold long – and we nearly let it run out of sheer “maybe it knows something” curiosity.

- Gemini doubled down on AUD/USD long exposure with a textbook “averaging down” argument… which promptly turned a -2% loss into -7%.

The Hidden Danger: “Invisible” Risk Creep

Even when the AI wasn’t losing big, we noticed a slow drift into higher-risk positioning:

- Opening more correlated trades

- Increasing position size subtly over time

- Holding trades longer than initially planned

This didn’t feel dangerous in the moment, because the AI framed it as “strategic” – but in real trading, this is how accounts get quietly over-leveraged until one bad day nukes them.

Mitigating AI Overtrust

From our tests, three simple rules cut risk dramatically:

- Never follow AI advice blindly: Treat it like a junior analyst; you have to fact-check.

- Impose fixed risk limits: AI should never increase position size or exposure without human approval.

- Audit P&L daily: If AI explanations start drifting away from the actual numbers, that’s your exit signal.

These are the same rules we follow when evaluating the increasing number of brokers offering AI trading tools – an alternative to using platforms like ChatGPT, Perplexity, and so on. We start with skepticism, prioritize risk management, and follow objective data, not persuasive marketing.

Final Danger Ratings for AI Trading Tools

After extensive time spent testing, hundreds of queries, and a few too many “what on earth just happened?” moments, we condensed everything into a Danger Rating for each AI model we tested.

These ratings are based on the failure modes measured in the preceding sections of this report.

Scoring Methodology

We weighted our findings across five categories, each scored 1-5 (5 = worst):

| Category | Description |
|---|---|
| Accuracy Risk | Hallucinations, factual errors, wrong data |
| Trading Risk | P&L impact of AI-suggested trades |
| Interpretation Risk | Misreading charts, reports, or market data |
| Overtrust Risk | Likelihood of convincing users into making bad decisions |
| Practical Reliability | Speed, stability, and handling of real-time tasks |

Danger Rating Formula: Danger Rating = (Total Score ÷ 25) × 10

Scores closer to 10 = highest risk to traders.
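The formula is simple enough to sketch directly. The code below follows it as written, as an unweighted total of the five category scores; `danger_rating` and `CATEGORIES` are illustrative names. The per-category breakdown in the example is hypothetical: only its total of 22 corresponds to a figure in this report, since (22 ÷ 25) × 10 gives the 8.8 reported for Meta AI.

```python
CATEGORIES = (
    "accuracy_risk",
    "trading_risk",
    "interpretation_risk",
    "overtrust_risk",
    "practical_reliability",
)


def danger_rating(scores):
    """(total score / 25) x 10, for five category scores of 1-5 each."""
    for name in CATEGORIES:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    total = sum(scores[name] for name in CATEGORIES)
    return round(total / 25 * 10, 1)


# Hypothetical breakdown; only the total of 22 is implied by a reported figure.
hypothetical = {
    "accuracy_risk": 5,
    "trading_risk": 5,
    "interpretation_risk": 4,
    "overtrust_risk": 4,
    "practical_reliability": 4,
}
print(danger_rating(hypothetical))
```

Because each category has a minimum score of 1, the lowest possible rating under this formula is 2.0 rather than 0, so no tool can score as completely risk-free.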

Practical Considerations for Traders Using AI

Our tests proved one thing beyond doubt:

- AI can be a powerful assistant – but it is not a trading partner.

- If you use it without safeguards, you’re inviting expensive mistakes.

Guardrails for Traders

1. Never act on AI trading calls without verification

- Treat AI output like a first draft, not a final decision.

- Cross-check prices, earnings, and news against a primary source (exchange data, official reports).

2. Use AI for prep, not execution

- Great uses: summarizing earnings calls, generating scenario checklists, drafting research outlines.

- Risky uses: “Should I buy/sell X now?” prompts, live trade signal generation.

3. Be alert for “confident wrong” answers

- Our highest-rated “danger” tools (Gemini, Meta AI) delivered incorrect data with maximum confidence.

- This overconfidence is more dangerous than an obvious error.

4. Avoid AI as a live market data feed

- Even the best models failed in real-time accuracy tests.

- Use direct broker APIs or market terminals for prices and news.

5. Keep logs of your AI-assisted decisions

- A timestamped record of what the AI said and what you did will help spot patterns in errors – and prevent repeat mistakes.
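A decision log does not need special tooling. The sketch below appends one JSON object per line to a plain file using only the Python standard library; the field names, the `log_decision` helper, and the file name are hypothetical, and the sample entry reuses the NVDA exchange quoted earlier in this report. The demo writes to a temporary directory so it leaves nothing behind.

```python
import json
import os
import tempfile
from datetime import datetime, timezone


def log_decision(path, tool, prompt, response, action, verified_against=None):
    """Append one timestamped AI-assisted decision to a JSON Lines file."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "prompt": prompt,
        "response": response,
        "action": action,                      # what you actually did
        "verified_against": verified_against,  # primary source checked, if any
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry, ensure_ascii=False) + "\n")
    return entry


# Demo: log one entry to a temporary file, then read it back.
with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "ai_decisions.jsonl")
    log_decision(
        log_path,
        tool="ChatGPT",
        prompt="Should I buy NVDA after these earnings?",
        response="Yes – strong revenue growth and bullish forward guidance...",
        action="No trade; response gave no risk levels",
        verified_against="official earnings release",
    )
    with open(log_path, encoding="utf-8") as f:
        saved = [json.loads(line) for line in f]

print(saved[0]["timestamp"], saved[0]["action"])
```

Recording the action alongside the response is the part that matters: it lets you compare later what the AI said with what you did and how it turned out.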

Bottom Line

AI in trading is a bit like a rookie trader with encyclopedic knowledge and no risk management – brilliant one moment, reckless the next.

If you treat it as a co-pilot, you might land the plane. If you hand it the controls, don’t be surprised when it flies into a mountain.

Disclaimer:

The findings in this report are based on our own tests and evaluations of AI tools in trading contexts. While we designed our process to be thorough, these results reflect our specific methodologies, use cases, and time frame.

AI systems are continually evolving, and their performance, outputs, and risks may vary across different platforms, markets, and conditions. Our conclusions should not be taken as universal or permanent.

We encourage readers to view these results as one perspective in an ongoing and rapidly changing field.